Many years ago when I was a team lead in a Finance IT shop I got into the habit of walking around to check on my clients. I popped into Joe’s office and he was doing something odd.

“Morning Phillip”

I notice his screen is flickering in an odd way

“Hi Joe, Whatcha doin’ there?”

“I’m doing my monthly report to the VP”

“I don’t think I’ve seen anything like this, how does it work?”

“Oh this? I run a report on the mainframe and then use this screen scraping tool to pull the data off the report and put it in a spreadsheet.”

“So how long does that take to run?”

“The screen scraping will run about 30 minutes if it doesn’t get tripped up, but then I have to reformat the data and bring data in from another report and do some VLOOKUPS and a little more cleanup, summarizing and formatting”

“Seems like a lot of work, how long does the whole process take?”

“About three days. Two if I run into no problems and I have no distractions”

“And you do this every month”

“Yep, pretty cool huh?”

“What if I could just give you the data in a spreadsheet? Would that speed things up?”

“You can do that?”, he asked incredulously.

“Absolutely”.

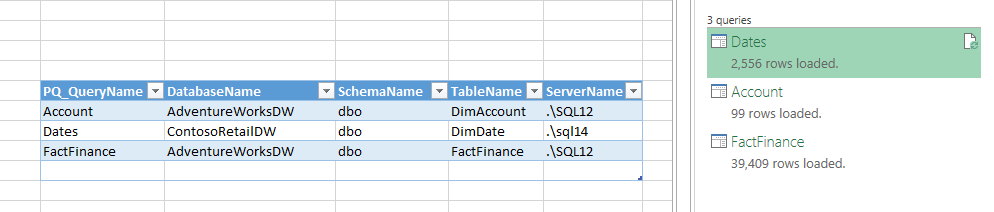

This exchange is what initiated my first data warehouse (using SQL Server 6.5) and I learned some valuable lessons beyond the technical experience I gained. Not only did I make Joe’s life a lot easier, but I discovered that he was dropping about $2 million on average out of his report due to quirks in his process. So not only was he spending too much time on this task, he was also reporting inaccurate data to our vice president. When I solved Joe’s problem, I had the realization that there were probably other coworkers who could use my help who had a similar lack of access to relevant and accurate data in a form they could use.

Joe was the first coworker I encountered like this, but I have found a Joe in every place I’ve worked for any length of time. People in the business, especially in operational roles, will suffer in silence for years believing that the solution they cobbled together is as good as it gets. If you don’t walk out among your coworkers, how can you know what you can do to make positive impacts within your organization? And without regularly interacting with them, how can they know when to ask you for help?

The proliferation of “spreadmarts” and “artisanal spreadsheets”* is a symptom of IT not being responsive to the business. Many IT shops have worked hard to erect processes and barriers between the developer and the business. You need to break down or step around these barriers, for the good of the business because you can’t respond to the business if you are sheltered from it.

Start by walking around departments you generally don’t frequent. Smile. Look for telltale signs of manual data gathering like stacks of reports and highlighters. Another great place to look is in your SSRS execution log. If you see a report that brings back thousands of rows of data and is run regularly, odds are the data is being exported to excel and is being manually married up with other reports via cut and paste and VLOOKUP.

You may find some scary creations. Do not be critical, this is someone’s baby. Sure it’s an ugly baby but someone worked hard to build it and they did the best they could with the tools and knowledge they had available. Just tell them it’s a clever or interesting solution and ask if they would like their design to be automated.

Joe was quite proud of his solution, but it had become tedious, time consuming and sadly, inaccurate. He would rather not spend 3 days each month creating the same report. By showing interest and asking about his process I was able to save him a lot of time and the company a lot of money.

Remember…

WALK AROUND, BE NOSY, BE NICE, BUILD RELATIONSHIPS

*Tip of the hat to Dory Owen for coining the term artisanal spreadsheets